Why you need to use Geopackage files instead of shapefile or GeoJSON?

Nikhil Hubballi

15 minutes

Spatial Analytics

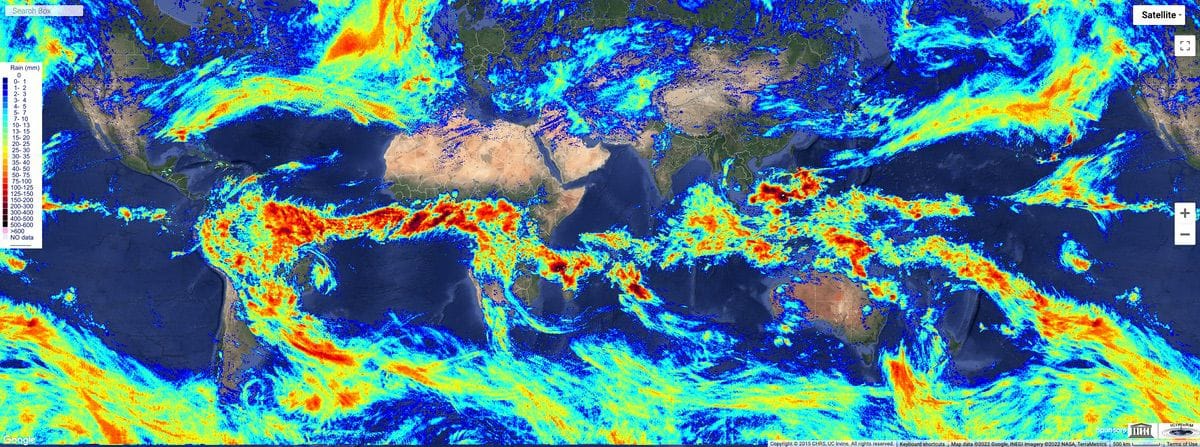

If you have been using the vector data and doing spatial analysis, you know shapefile and geojson. These are two of the most commonly used vector data formats to store data and carry out any spatial analysis. But, both these formats have a lot of disadvantages when you wish to scale your work and build integrated & automated workflows for large-scale deployments. And that's why you need to use Geopackage files instead of shapefile or GeoJSON. Let's dive deeper into the details.

If you would like to read more about geospatial data and how it's changing the field of data analytics, check out my blog on the topic here.

Problems with Shapefiles

Shapefiles have been around for a long time now. ESRI developed this format in the early 1990s & since then, it has become one of the widely adopted standard formats to work with and share vector data among people. Shapefile stores non-topological vector data along with related attribute data. Though widely used, it has quite a few & significant disadvantages for the modern use cases;

- Shapefile is a multi-file format. Each vector layer you save has a minimum of 3 files (.shp, .shx & .dbf) and several other attached files with different extensions. So, if you want to share a shapefile with someone, you have to share all those files for one layer. And if you have several layers, the number of files is large. It's not ideal to have ~4-6x the number of files for each of your project.

- Shapefile supports related attributes data similar to a tabular dataset with column headers. But you can only have ten characters to define the column header, and it is not always ideal to have an abbreviated form of column headers where some description/identification is necessary on column headers.

- The shapefiles have a maximum size of 2GB. You can't export a vector layer with more features that may exceed the 2GB as a shapefile.

- The shapefiles can't have more than one geometry type in a file.

- As the size of the shapefile increases and as you deal with more attribute columns & rows, the performance of the shapefile drastically reduces and it becomes sluggish even with a spatial index on QGIS.

Problems with GeoJSONs

GeoJSONs were in part created to address the multiple files problem with the shapefiles. Built as an extension of JSON objects used on the web applications, it did solve a few of the issues shapefiles posed. But it has its own set of limitations;

- For a similar number of vector features with attributes, GeoJSON has almost double the file size compared to shapefile in most cases.

- GeoJSONs have no spatial indexing. So, it's tough to handle when dealing with a large number of features. And just panning around the spatial features to explore on a QGIS map canvas is a tiresome task most of the times.

- Whenever you load the files to run some tasks, the entire file is loaded onto the memory at once, and this might create problems in several scenarios, especially with large files.

- Also, the loading of the files is usually slower compared to shapefile and geopackages, but the memory consumption is similar or more.

- If the file size exceeds some limit (~10-11 GB in my experience), the features might get written incompletely, hence making the file corrupt.

What's GeoPackage?

Developed by OGC as an open format for geospatial information, they define the GeoPackage as below;

GeoPackage is an open, standards-based, platform-independent, portable, self-describing, compact format for transferring geospatial information.

A geopackage, in essence, is an SQLite container with OGC encoding standards for storing vector features, tile matrix (raster data), non-spatial attributes data and extensions.

By default, each of the geopackage files has few meta tables like below to understand and handle the geospatial layers.

Advantages

- Open source, based on SQLite database

- Very lightweight but highly compatible across across environments (esp. in mobile devices where connectivity & bandwidth is limited)

- Geopackages are generally ~1.1-1.3x lighter in file size compared to shapefiles and almost 2x lighter with respect to geojsons.

- Since the vector layers in geopackage are inherently rtree indexed (spatial indexing), loading file on QGIS or making queries on the file database is fast.

- There is no limit on the file size and it can handle large number of features in a smaller file size.

- Compared to shapefiles, the column headers can be full names and right by providing the correct context for each column.

- You will see a faster run and algorithm outputs on geopackages compared to shapefiles (You can try this on QGIS).

- A single geopackage file can have multiple vector layers with each layer having a different geometry type.

- You can have non-spatial attribute tables (pandas tables) as well along with vector layers.

- We regularly deal with making changes to the vector layers as the data is updated. And loading and editing the features of geopackage files on QGIS or python is faster.

- The file can be handled using GDAL, QGIS, Python, R, SQLite and Postgres (with few limitations on each mode)

- Adding and loading the geopackage to a Postgres database is much faster compared to shapefile or geojson (which takes forever with some larger datasets) since it's already a database format and spatially indexed (compared to shapefile or geojson).

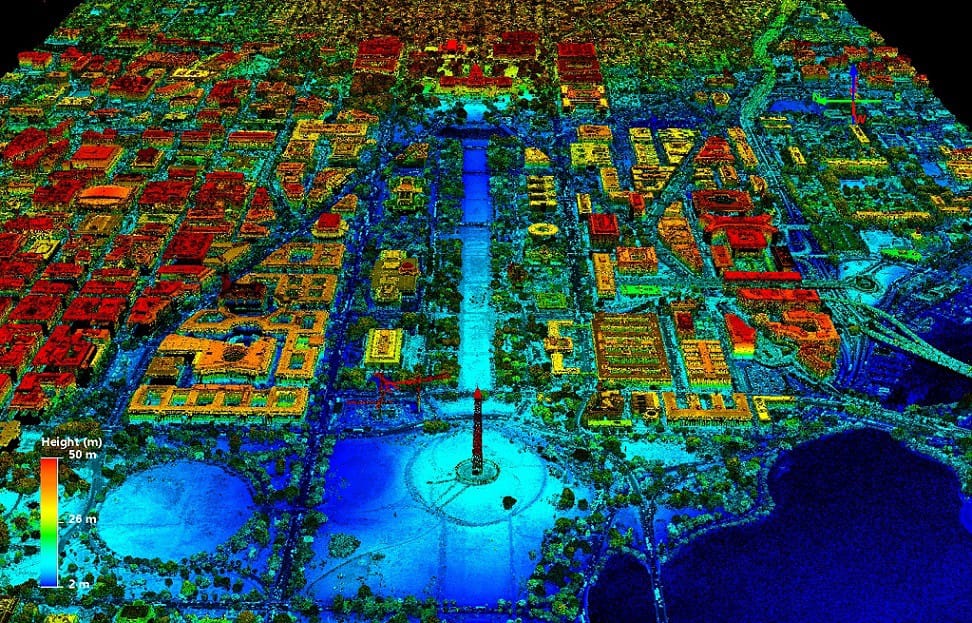

- Interestingly, geopackages can also handle rasters as a tile matrix. (of course, there are some limitations to this.)

How can one use it in their Workflow?

We understood the advantages of using geopackage files compared to the shapefiles and GeoJSONs. But how and to what extent can we integrate the geopackage files in our spatial analysis workflows? Let's explore few options.

Large Output Files

Of course, as I mentioned earlier, since the geopackage is a relatively lighter file format with spatial indexing, you can use it with large datasets with millions of features and multiple attribute columns. Because of its database-like capabilities, you can perform some of the file handling operations with ease.

Tiled Tables / Multi-layer Files

Let's say you are generating vector layers for all the states of India, and each state has millions of features. In this case, you can have each state data as a separate layer in the same geopackage file, basically reducing the number of files and with the benefit of smaller file sizes compared to shapefile and geojson. (although, it's not ideal to have all the vector layers of a project in one single file)

Reduce/Avoid Redundant Files for Outputs

In most cases where data teams deal with spatial datasets, there's always an attached csv or excel file attached. It is for a quick view of the attributes without loading the heavier geometry columns or specifically opening the vector file supported software. It creates a repository of redundant files in the storage. There are multiple files of the same data without the associated geometry columns. Since geopackage support non-spatial tables, you can use it to reduce the number of files by having a non-spatial data master table within the file corresponding to the vector layer.

- file.csv and file.shp (.dbf, .cpg, .prj, .shx)

- file.csv and file.geojson

Geopackage can have non-spatial table to avoid an extra csv file.

Spatial Views

Another cool feature of geopackage that can help save the file sizes is spatial views. One particular dataset with attribute columns can have multiple geometry types.

For example, a village dataset can have both the village boundary polygon layer with the centroid point location layer of each village. In both the vector layers, except for the geometry, all the other columns identifying the village remains the same, like name, district, state, village code etc.

With spatial views, you can reduce the file size by creating saved SQL queries that you can use to access data from multiple tables within a geopackage. These SQL queries are run on the go while visualizing these particular spatial views (and rendered if on QGIS).

You can create a spatial view on geopackage by simply inserting an sql query to the geopackage file such as below;

Load only Parts of Vector Layer onto Memory

Since the geopackage file is SQLite based, you can read only part of data from layers on the geopackage file using an SQL query. It's possible to apply few functions as well on geometries.

Geopandas in Python can handle file operations on geopackage, but it loads the entire vector layer from the file. Therefore, I wrote a simple python module called GPAL (Geopackage Python Access Library) to treat the geopackage as an SQLite database and read features based on SQL queries.

(The module is still in development stages and it can handle only few of the operations as of now.)

Reading with SQLite3 python library;

Reading with the GPAL Python library with query filter on the vector layer;

Handling Geography Masters

When dealing with administrative datasets, we usually have vector layers that we can treat as geography masters. For example, for the US, the vector file containing all the counties with their boundary geometry and attributes such as name, county code, the state is a geography master. And all the datasets that linked to these counties, such as population counts, industry counts, revenue, tax data etc., that utilize county master data are derived vector layers.

In such cases, geopackage are ideally suited, which can handle both spatial and non-spatial tables. You can keep a data master with all the attributes as the default table/layer. And all the other layers can be created as table views by keeping the primary key identifiers and the data columns.

For example, In the earlier given example of geopackage file india_villages_master_2011.gpkg;

- data_master file will have all the attributes.

- village_points layer has village code (primary key) and point geometries.

- voronoi_polygons layer has village code (primary key) and voronoi geometries.

- spatial views view_india_village_points and view_india_village_voronoi will be the views created after joining master attributes to geometries.

The new india_villages_master_2011.gpkg, which had master attributes for more than 600K villages along with point, and Voronoi geometries was 460MB in file size. Whereas the original village_points shapefile was 520MB & village_voronoi shapefile was 820MB with a csv file of 110MB (without the geometry) for India village master.

Work In Progress (WIP) Layers in One File

When you are running a workflow with several data inputs and intermediary steps before the output, you'll have intermediate files. These (WIP) files are necessary for debugging after generating the outputs. These files can be of use by themselves in other processes.

But, through the workflow, there will be several files generated. And you can lose track of them, or they might clutter the folders of your project. There will be multiple versions, shapefile dependencies, csv and vector files of the same data. Even slight changes go into a new file, with each having similar extensions.

One efficient way can be to store all those intermediate layers with geopackage format as a multi-table file. Also, instead of keeping all attributes in all WIP layers, you can have a data_master table and take primary keys in the subsequent WIP layers, and you can later create spatial/non-spatial views to fetch joined tables.

CSV with all attributes are large in size and adding a geometry column and making it a geojson would only increase the file size.

This way we can have fewer files and a much organised intermediate files for any project.

File imports for CartoDB

Carto has been one of the commonly used platforms for creating map dashboard visualizations on the web. You can upload a geopackage file similar to shapefile or geojson to add as a dataset. If your geopackage has multiple layers, each layer gets added as a new dataset with filename_layername format for the layer names.

Samples, Default Colour Styles & other Attributes

Another handy feature of geopackage is that it can save the layer styles you used to visualize on QGIS. For each layer, you can save a default layer style. So, when you load the layer on QGIS again, it automatically loads the colour style and other layer attributes.

GPAL

GPAL (Geopackage Python Access Library) is a library I developed to address a few limitations when using geopandas to read and handle geopackage files in Python. Few of the features of the geopackage format I mentioned above doesn't have methods on Python. Therefore I started with building a module.

At the moment, the module can read and handle the file operations on geopackage files with SQL queries similar to a sqlite3 module on an SQLite database. It helps in loading only parts of vector data onto memory instead of the whole layer. And currently, I'm working on a few other features as well.

What's Planned?

- Since geopackage handles both spatial and non-spatial tables, a method to process both these data tables consistently from the python module is necessary.

- The table view is a feature of databases. And since geopackage is based on SQLite, we can extend it to spatial views, as I mentioned above. But it involves handling the gpkg meta tables inside a geopackage file. And needs to be handled with a method of its own.

- Methods to handle the multi-layer format files in different workflows.

Geopackage is a very light and highly compatible vector file format. You can save a good amount of storage, deal with fewer files, run algorithms and visualize faster. Being an open-source format with continuous updates is the icing on the cake. All its current & potential features inspired me to take up the GPAL project to develop something I hope to add more versatility to using geopackage on Python.

I hope this article helped you understand the features and advantages of geopackage over other vector file formats. I’d be happy to receive any suggestions to improve this further.

If you liked this blog, subscribe to the blog and get notified about future blog posts. You can find me on LinkedIn, Twitter for any queries or discussions. Check out my previous blog on how to use QGIS spatial algorithms with python scripts here.

Some Conversions

Using GDAL from command line

- Convert a shapefile to geopackage

- All the files (shapefile/geopackage) will be added to one geopackage.

- Add geopackage to postgres database

Do you like our stuff? Subscribe now.

You may also like

.jpg)